“The AI eventually begins making decisions based on the data analyzed and begins to wade through the torrent of images available in its repository, selecting successful images for a hierarchy of production and re-distribution of usefulness within the sets of codes previously implied.”

In what manner does a machine contemplate the visual identity and value of human life, and at what point does it dictate those values back with enforcement? If it is ascribed a set of algorithms based on photographs and their hierarchical value (award-winning and iconic status, or simply being “of higher value”), how does the computer organize its production of images when it is mandated to create such images from existing data with loose or absent value systems? Further, how long do we allow the AI to contemplate these images? Do we shut it down when it arrives at the point of forming its own visual language, as was done with the Facebook AI that formulated its own internal communication and language system and was, as widely reported, abruptly shut down by engineers?

“Computer vision” is, broadly, the field of artificial intelligence concerned with teaching computers to interpret images. In the application that concerns me here, a computer, or a series of computers, analyzes photographic data from large pools of individual photographs and, using code written at inception, organizes those images into value systems based on how widely they were distributed or whether they won awards. Merit, in this case, means a journalistic hierarchy of publicly applauded or endorsed “icons”. The system can work from fairly simple code and pixel-based source imagery, with shape, color and composition weighted as vectors that are collated into numerical gradations of “successful” data. It can also turn very loose shapes and colors into a full photographic image without grounded subject matter: it literally paints in the details, whether they are based in reality or inspired by simulacra. That is to say, when coded to do so, it can hypothesize a photograph from very primitive sets of designs and data. The AI eventually begins making decisions based on the data analyzed and begins to wade through the torrent of images available in its repository, selecting successful images for a hierarchy of production and re-distribution of usefulness within the sets of codes previously implied. In effect, it determines what counts as “successful” for specific target groups or a manufactured intent, and uses that to produce new imagery from a large archive of pre-existing photographs. These presets are, at inception, human-made. They are agreed upon through contests, consensus, awards, newspaper distribution, frequency of similarity to other images and other methods of measuring an image’s weight or impact upon the world; the same presets also flag images that are mundane in nature, which would be avoided.
Computer Vision programs work mathematically from the aforementioned presets and order their value systems by composition and sometimes by the racial identity of sitters. They do so through mathematical processes based on pixel registration and code, until such time as they may expand the preset into automation and control.
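To make the idea of a “hierarchy” of images less abstract, here is a deliberately simple sketch of how such a ranking could work. It assumes each photograph has already been reduced to a short feature vector; the three features, their values and the image names are invented for illustration and are not drawn from any real system. Images labelled “successful” by human judges are averaged into a single target, and every image in the archive is then scored by its cosine similarity to that target and sorted. Real computer vision models learn far richer features, but the human-made preset, the choice of which images count as “successful”, plays the same role.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def centroid(vectors):
    """Element-wise mean of the human-selected ("successful") reference vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def rank_archive(archive, successful):
    """Sort archive images from most to least similar to the 'successful' preset.

    archive: dict of image name -> feature vector (hypothetical features)
    successful: list of feature vectors for images humans judged successful
    """
    target = centroid(successful)
    scores = {name: cosine(vector, target) for name, vector in archive.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical features per image: [composition, color saturation, contrast], 0..1.
award_winners = [[0.9, 0.3, 0.8], [0.8, 0.2, 0.9]]
archive = {
    "street_protest": [0.85, 0.25, 0.85],
    "holiday_snapshot": [0.2, 0.9, 0.1],
}

for name, score in rank_archive(archive, award_winners):
    print(f"{name}: {score:.3f}")
```

Nothing in the arithmetic asks why a given image was chosen as a reference; the ranking simply inherits whatever the preset encodes.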

Fear of De-Humanizing Populations Through Hierarchical Order

I once joked, “Democracy begins with producing images.” I had found an image from the 1930s of a white western man showing a Maasai elder how to use a camera. The aside was a pointed study of the ideas circulating around oppression, control technologies and colonialism. It was a simple aside, but historically, not such a simple one. Images and science have done a great deal for humanity, depending on who controls them and what the propaganda has called for. Every image is propaganda. This is not to say that one should fear an image or technology per se, but rather that the hierarchy in which they are conditioned and re-distributed, and the purposes they serve, may determine public opinion, but also legislation and political systems, tyranny or otherwise.

If one considers the base pre-text of racial classification systems in the oppression of people discriminated against by race, skin color or culture, one need only look back to the mid-nineteenth century, the crucible of evolutionary thought, to Alfred Russel Wallace and Charles Darwin and, earlier, Jean-Baptiste Lamarck and Franz Joseph Gall, to see how the identification of an image within a hierarchical system paved the way for manufactured racism and hierarchies of power, class and education, both colonial and domestic. This further led the way to Julius Streicher’s “Der Stürmer” and a myriad of other publications in which images, photographic or otherwise, were purloined to classify the “other” as inherently lesser and to pursue an ideology of collective immolation of their target group during World War II. It did not stop there. The “savage”, noble or not, was examined and photographed, and large tracts of racist pillorying ensued.

You might ask yourself why I am bringing up race while speaking about AI and Computer Vision. You may assume that my instinct for de-advocacy is to speak about how Kodak film stock was inherently racist, or how certain AI systems also reproduce racism through their programming, as in the well-known 2015 case of Google Photos tagging black people as gorillas. The algorithms themselves are not concerned with race as such; rather, they have been predicated on an inherent, historically human-made language of hierarchical distribution, and an unsurprisingly racist one at that. Technology and racism share many chapters.

So my question in all of this is not simply the problem of AI and racism, but rather the distribution of hierarchies of images as AI progresses, at a speed we cannot fathom, towards producing its own images. AI’s learning capacity over a single week is allegedly something like 20,000 times that of its human counterpart. At that pace, morality is not a function of its genus at inception or collation, and there is no fail-safe, no red button, that will stop its calculations concerning the human species, its replication, its distribution of AI-induced language or its hierarchies of value. It extrapolates from data, a sort of bit-by-byte Nostradamus, yet presets for ethics and morality seem to be missing from much of the discussion surrounding AI and the fabricated image.

But Do Machines Argue?

You might ask, and it’s a good question. Imagine a human society in which the divisions of race, gender and the general nausea-inducing pandemic of religion no longer count in the decisions affecting the population, or in the way it distributes problem-solving techniques. Is it sounding more ominous yet? Imagine that the AI, using its Computer Vision, has extrapolated from observed measurement: “the hoodie is dangerous”, “the shoes suggest law-abiding wealth”.

It would not take long for such a control mechanism of visual hierarchies, run by an advanced system whose ultimate goal is no longer the one pursued by its creator, to “think 2.0” for itself and execute real-time decisions based on a previously uploaded set of codes. The next step would be to make decisions from an image by itself and to distribute its solutions, which are increasingly difficult to distinguish from the human per(IN)ception of “reality”. It is impossible to know the consequences, but if it functions on base survival or the super-skills of “progress” for itself, which will be its base condition, then it will visualize and target humans it deems unnatural, unnecessary or simply annoying to its objective, by image apprehension and re-distribution alone. Who will have a place in the new frontier-ism of manufactured imagery, and who will be able to de-code it? What happens next is conjecture, but thinking objectively, why would we need anything less than a society of Überfräulein and Übermensch, if at all? Nanotechnology is the perfect sterilization vehicle: painless, with no clean-up, unacknowledged at the point of incision and, just like drones, easily managed and breached by super-technologies. Where does this leave things? The AI scorecard. Your image rated as destiny for the simulacral re-distribution of self at the point of singularity and ecological collapse, the paradigm of decline re-manifested in an extra-dimensional simulation.
Your meta-self will have been collated and colonized by AI, and your hierarchical value, based on image alone (and perhaps your bank account and criminal record), the surface of you if you will, will be the way in which you are merited or de-merited by AI in the empire of new images.

Humans reportedly shut down an AI system that invented its own language, but did not shut down Computer Vision, which replicates images of everything. Why? Short-sighted human behavior, based on the fallacy of control: the possibility of software being co-opted by opposing humans, as coding machines were in WWII, does not sufficiently temper our excitement at a machine that creates our collective and plausible fascination. These images will be collated into a separate dimension where, as with avatars, human “existence” will continue, albeit through a proxy dis-continuation of able will, materiality and serendipity. It will play itself out based on images collated in the present, under the guiding hand of software and the human need to display ego through the image. Grand Theft Auto, Avatar and Second Life are going to bleed from the edge of the screen when optics take a U-turn past the overly expensive Google Glass to bio-interface with humans. This is already happening. So imagine a world in which your LASIK comes with an upgrade to possible VR realities.


Does She Speak?

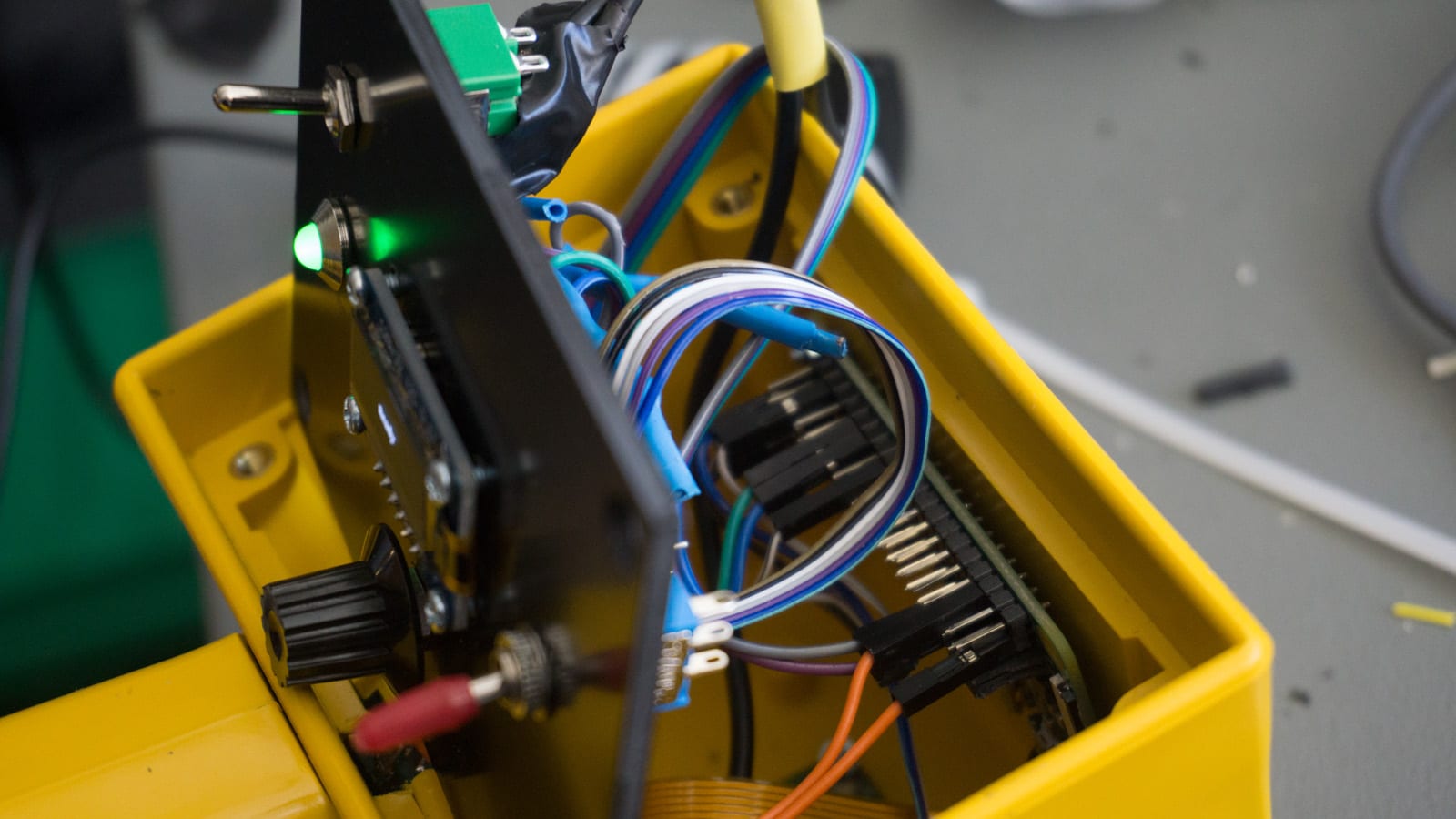

Max Pinckers and Dries Depoorter have set out to investigate what a purpose-built, algorithm-based system could do if it examined the history of award- and prize-winning press photographs from 1955 to the present. The camera has been designed to recognize these images and look for similar possibilities in a live stream from a particular location. The design is an edit-based system rather than a production mechanism. This means that it assesses what appears in front of its lens and whether it may qualify as an exceptional photograph, measured against the set of press photographs mentioned above, which, needless to say, is a much smaller pool than exists overall. The idea is that the camera’s data set triggers it when it perceives what would be an exceptional image. It is not especially sentient in this sense, but it points to the possibility I have outlined above. The next step, based on Computer Vision ideas, would be to fabricate images from these presets. Pinckers and Depoorter’s camera is specifically human-made, and its purpose is a dialogue with pre-existing images on the hierarchical scale, with the added performative dynamic of a pre-ordained location and subject pool: in this case, the museum environment of FOMU in Antwerp. My thoughts on this project concern the possibilities of such a technology, but also the questions it poses: who sets the code, to what purpose, and how far this sort of concept can be furthered, for better or worse.
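As a rough illustration of an edit-based rather than production-based system, the sketch below assumes each live frame and each reference press photograph has already been reduced to a feature vector. The vectors, frame names and the 0.95 threshold are hypothetical, and this is not Pinckers and Depoorter’s actual implementation, whose internals are not described here. A frame is kept only when it closely resembles at least one reference image; nothing new is generated.

```python
import math
from typing import Iterable, Iterator, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def trigger(
    frames: Iterable[Tuple[str, Vector]],
    references: List[Vector],
    threshold: float = 0.95,  # hypothetical cut-off for "exceptional"
) -> Iterator[Tuple[str, float]]:
    """Yield (frame id, score) for frames close enough to any reference photo.

    The score is the best cosine similarity between the frame and the
    reference set (a nearest-neighbour match). Frames below the threshold
    are discarded, so the system edits the stream rather than producing images.
    """
    for frame_id, features in frames:
        score = max(cosine(features, ref) for ref in references)
        if score >= threshold:
            yield frame_id, score


# Hypothetical feature vectors for two prize-winning references and two live frames.
press_photos = [[0.9, 0.3, 0.8], [0.1, 0.8, 0.7]]
live_stream = [("frame_001", [0.2, 0.9, 0.1]), ("frame_002", [0.88, 0.31, 0.79])]

for frame_id, score in trigger(live_stream, press_photos):
    print(f"shutter released on {frame_id} (similarity {score:.3f})")
```

Taking the maximum over the references, rather than an average, means a frame only has to echo one canonical image to trigger the shutter; it is one plausible design choice among several.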

The camera itself is, by technological standards, somewhat primitive. The project is a collaboration between two art-minded white men. I could suggest that there is more to be said about the creators than about the AI/machine itself, but for the purpose of this article, my interest is the broad spectrum of ways in which technology such as this may be harnessed, and what it does if it embarks on a circuit of complete autonomy. The questions posed by Pinckers and Depoorter far exceed what is on view. That is not to take away from its output. Its real output is the fingerprinting of the future of images. That the creators have gone as far as they have shows how technology, like citizen journalism, can enter into an engagement with AI and photography that leaves more questions than answers, while foreshadowing grave possibilities for our future.

Max Pinckers and Dries Depoorter

(All Rights Reserved. Text © Brad Feuerhelm. Images © Max Pinckers and Dries Depoorter.)